The Algorithm Behind AI’s Learning Ability

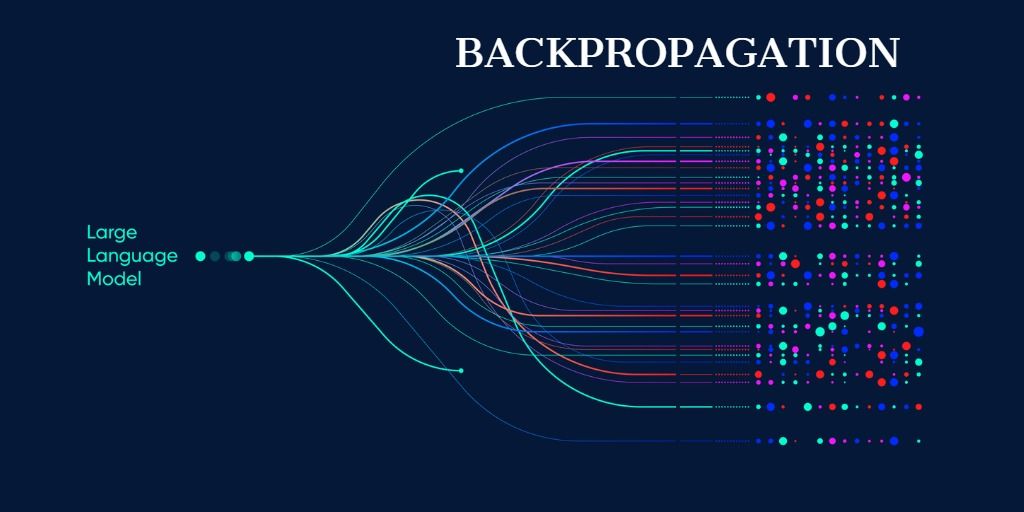

Understanding Backpropagation

Backpropagation is a core algorithm in machine learning for training artificial neural networks (ANNs). It computes the gradient of the loss function with respect to every weight in the network, and those gradients are then used to fine-tune the weights. Repeating this process lets a network learn from data and steadily improve at the task it is trained for. Backpropagation's efficiency and generality have made it a standard tool in domains such as image recognition, audio processing, and natural language processing. It lets neural networks fit complex input patterns, which underlies everyday applications such as voice-activated assistants and automatic picture tagging.

To appreciate the relevance of backpropagation, first consider its role in the learning process. During training, a neural network generates predictions, and a loss function measures the difference between the predicted outputs and the actual target values. Backpropagation then applies the chain rule backward through the layers to determine how much each weight contributes to that loss, so that each weight can be adjusted in the direction that reduces the error. Over many iterations, the network's predictions become more accurate.

Because it only requires the network's operations to be differentiable, the method applies to many architectures, from simple feedforward networks to deep convolutional and recurrent networks. Whether it is used to improve computer vision or to enable natural language understanding, backpropagation is at the heart of current machine learning advances.
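The training loop described above (predict, measure the loss, propagate the error backward, adjust the weights) can be sketched in a few lines of plain Python. This is a minimal illustration under assumptions that are not in the article: a single hidden layer with tanh activations, a squared-error loss, the XOR problem as toy data, and hypothetical helper names (`forward`, `loss`, `backward`, `train`).

```python
import math
import random

def forward(params, x):
    """One hidden tanh layer followed by a single linear output."""
    W1, b1, W2, b2 = params
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    y_hat = sum(w * hj for w, hj in zip(W2, h)) + b2
    return h, y_hat

def loss(params, data):
    """Mean of 0.5 * squared error over (input, target) pairs."""
    return sum(0.5 * (forward(params, x)[1] - y) ** 2 for x, y in data) / len(data)

def backward(params, data):
    """Gradient of the loss for every parameter, via the chain rule."""
    W1, b1, W2, b2 = params
    gW1 = [[0.0] * len(row) for row in W1]
    gb1 = [0.0] * len(b1)
    gW2 = [0.0] * len(W2)
    gb2 = 0.0
    n = len(data)
    for x, y in data:
        h, y_hat = forward(params, x)
        d_out = (y_hat - y) / n                      # dLoss/dy_hat
        gb2 += d_out
        for j, hj in enumerate(h):
            gW2[j] += d_out * hj
            d_pre = d_out * W2[j] * (1.0 - hj ** 2)  # back through tanh
            gb1[j] += d_pre
            for i, xi in enumerate(x):
                gW1[j][i] += d_pre * xi
    return gW1, gb1, gW2, gb2

def train(data, hidden=4, lr=0.1, steps=2000, seed=0):
    """Repeat forward pass, backward pass, and weight update."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    history = []
    for _ in range(steps):
        params = (W1, b1, W2, b2)
        history.append(loss(params, data))
        gW1, gb1, gW2, gb2 = backward(params, data)
        # Move every weight a small step against its gradient.
        W1 = [[w - lr * g for w, g in zip(wr, gr)] for wr, gr in zip(W1, gW1)]
        b1 = [b - lr * g for b, g in zip(b1, gb1)]
        W2 = [w - lr * g for w, g in zip(W2, gW2)]
        b2 = b2 - lr * gb2
    return (W1, b1, W2, b2), history

# Toy data (an assumption for illustration): the XOR function.
xor = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
params, history = train(xor)
print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}")
```

The `backward` function is where backpropagation happens: the output error `d_out` is passed back through the output weights and the tanh derivative to obtain a gradient for each hidden-layer weight. Real frameworks compute the same quantities automatically and in vectorized form.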

Gradient Descent and Advanced Optimization Techniques

Backpropagation is typically paired with gradient descent: backpropagation supplies the gradient of the loss, and gradient descent minimizes the loss by iteratively moving the network's weights in the direction of steepest descent, that is, along the negative gradient. Each iteration nudges the network toward lower error. Other optimization strategies, including metaheuristics such as the Grey Wolf Optimizer and Particle Swarm Optimization, have been investigated as ways to make training more efficient. These methods offer alternative ways to search for good weight updates and may accelerate learning. A notable development is the dynamic learning rate (DLR), which is inspired by biological synaptic competition. It adjusts the learning rate according to the current weights of the network's connections, so that the size of each weight update tracks the progress of learning.
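The basic gradient descent update, in which each weight moves a small step against its gradient, can be sketched as follows. The loss function, the starting point, and the fixed learning rate `lr` are assumptions chosen for illustration. The sketch does not implement the DLR, Grey Wolf, or Particle Swarm methods mentioned above.

```python
def gradient_descent(grad, w0, lr=0.1, steps=100):
    """Repeatedly apply w <- w - lr * grad(w) with a fixed learning rate."""
    w = list(w0)
    for _ in range(steps):
        g = grad(w)
        w = [wi - lr * gi for wi, gi in zip(w, g)]
    return w

# Hypothetical loss: f(w) = (w[0] - 3)^2 + 2 * (w[1] + 1)^2, minimized at (3, -1).
def grad_f(w):
    return [2.0 * (w[0] - 3.0), 4.0 * (w[1] + 1.0)]

w = gradient_descent(grad_f, [0.0, 0.0])
print(w)  # approaches [3.0, -1.0]
```

In a neural network, `grad` would be the gradient that backpropagation computes. Adaptive schemes differ mainly in how they choose the step size, which is held constant here.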

Image credit: iStock / KrotStudio

These techniques can make backpropagation a more efficient and effective tool for training neural networks.
